What can we learn from past behaviour?

I spend a lot of my time thinking about how we can use and understand the data we have for each player. How can we look at their performance on past questions to best choose which questions to show them next? When they get a question right (or wrong), how can we tell whether it was because they knew the answer or because they made a lucky guess?

These are questions that many edtech companies struggle with. They're also questions that some edtech companies like to proclaim they've solved. But I don't buy that. From my experience, both using other companies' apps and working on my own solutions, this problem is much harder than we like to admit.

A few weeks ago, I stumbled across this tweet:

Happy Thanksgiving

About 10yrs ago, this section from The Black Swan changed how I see the world. Try not to be the turkey. pic.twitter.com/91LbRhffm8

— Daniel Vassallo (@dvassallo) November 25, 2021

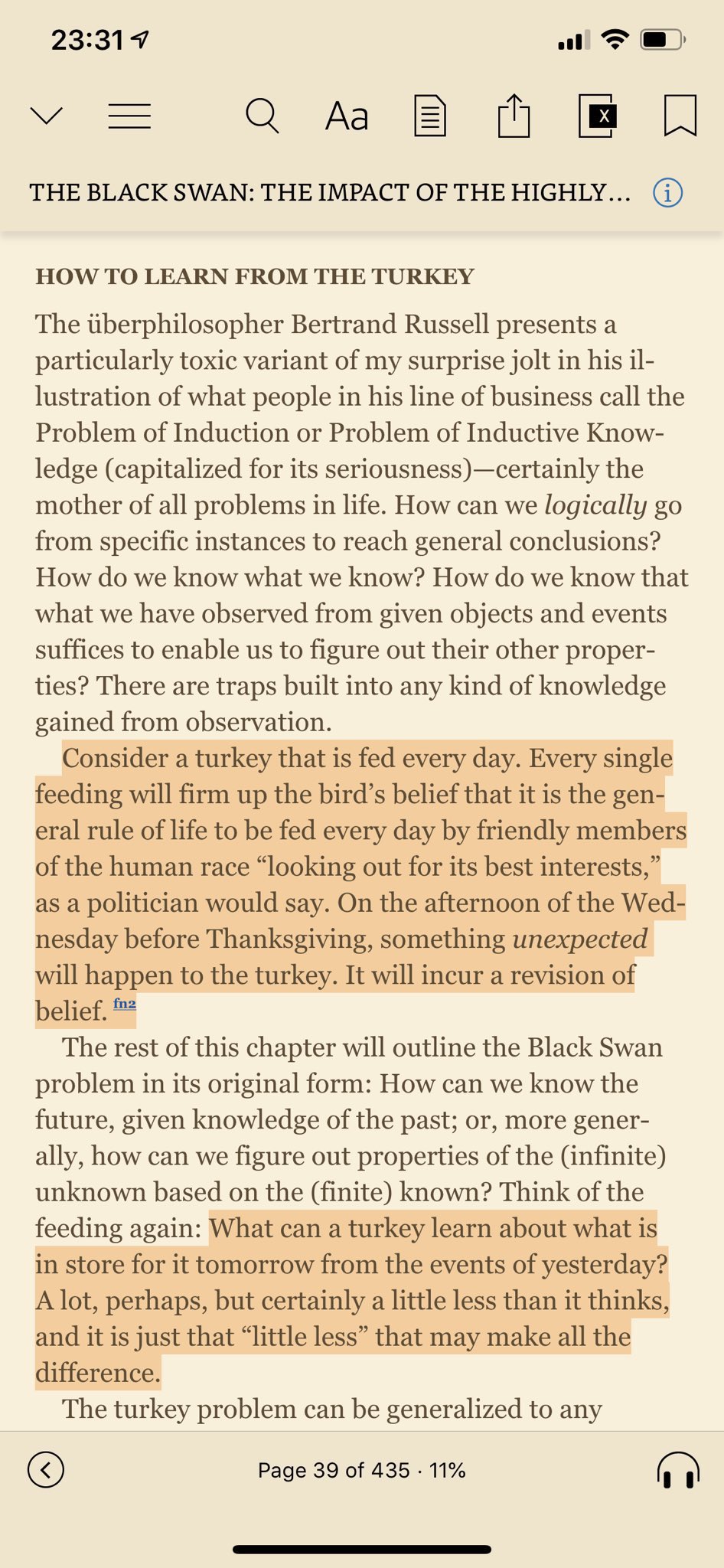

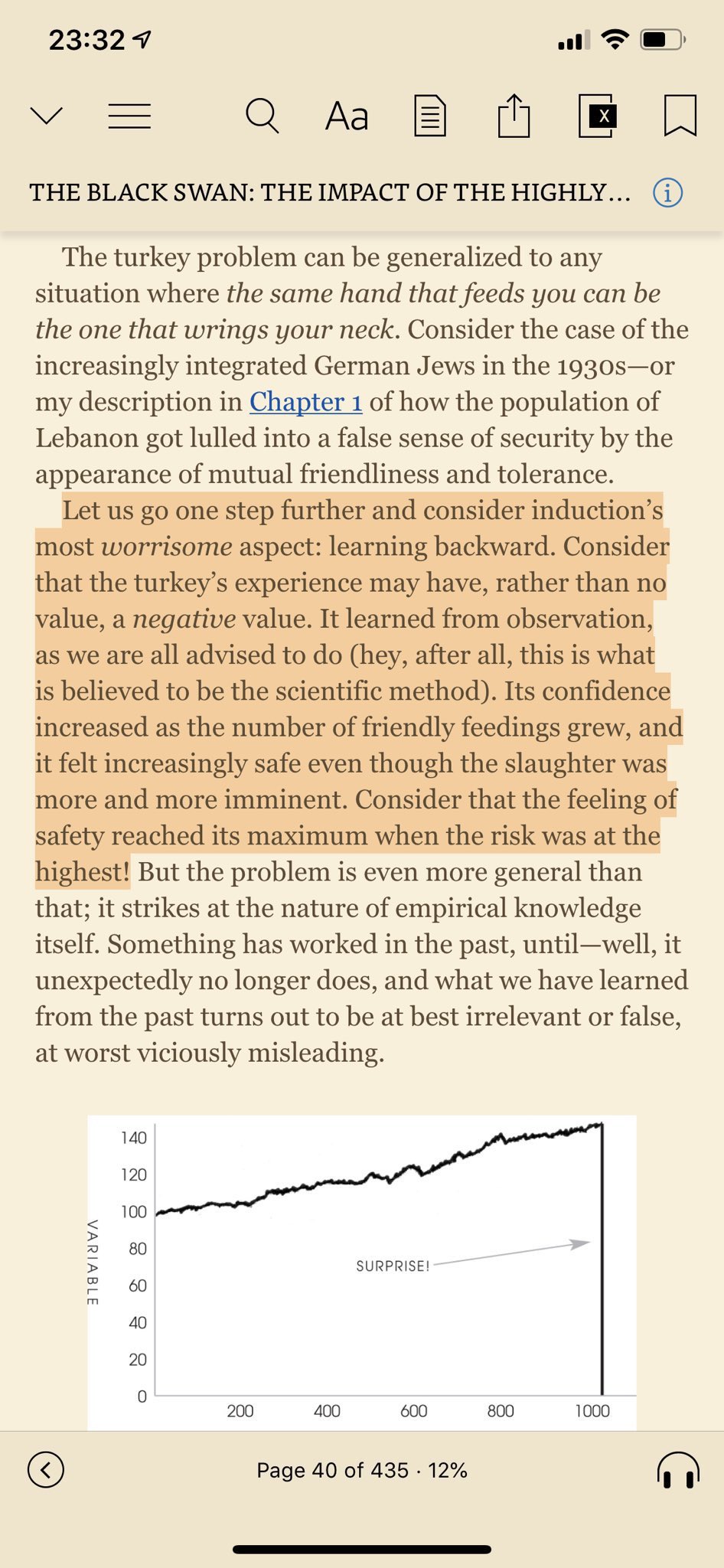

I've embedded the two images from his tweet below, so you can more easily read the highlighted portions.

I've been thinking about this tweet ever since. At work, I'm diving into the learning engine that powers Dreamscape. I want (and need) to understand how it works so I can think through how we might improve it. We want it to deliver passages that players will want to read and questions they feel competent to answer.

Our best way to predict that is to look at how they've done in the past. That's also the bulk of the knowledge we have about them. But even knowing how someone has done in the past, how well can we actually predict how they'll do in the future? And I don't even mean whether they'll get a question right or wrong, but whether they'll give it a real attempt at all. There are so many factors to consider, and many of them are out of our control.

The tweet also reminded me of one company's website I remember going through, which claimed it could predict how well students would do on a state test after they had used its program for a given amount of time. That claim was probably really motivating and exciting for teachers and administrators. But if you read more carefully, it said its predictions were 95% accurate. That means for 5% of students, or 1 in 20, the prediction was going to be off. That's at least one student in most classes. Multiple students in every school. Considering how heavily state exams can weigh on both students and teachers (something I don't like), this error rate can have huge consequences.
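To make that arithmetic a little more concrete, here's a rough sketch. It assumes a class of 25 and a school of 500 (both hypothetical numbers, not from the company's claim), and that each student's prediction is independently wrong with probability 0.05, which real classrooms probably don't satisfy.

```python
# A rough sketch: how many mispredicted students should we expect if a
# predictor is "95% accurate"? Assumes each student's prediction is
# independently wrong with probability (1 - accuracy), a simplification.

def expected_misses(n_students: int, accuracy: float) -> float:
    """Expected number of students whose prediction is wrong."""
    return n_students * (1 - accuracy)


def prob_at_least_one_miss(n_students: int, accuracy: float) -> float:
    """Probability that at least one student in the group is mispredicted."""
    return 1 - accuracy ** n_students


if __name__ == "__main__":
    accuracy = 0.95
    class_size = 25     # hypothetical class size
    school_size = 500   # hypothetical school size
    print(f"Expected misses per class: {expected_misses(class_size, accuracy):.2f}")
    print(f"P(at least one miss in a class): {prob_at_least_one_miss(class_size, accuracy):.1%}")
    print(f"Expected misses per school: {expected_misses(school_size, accuracy):.0f}")
```

Under those assumptions, a class of 25 expects about 1.25 mispredicted students and has roughly a 72% chance of containing at least one, and a school of 500 expects around 25.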

Anyway, I don't have a bunch of great answers. Honestly, I don't really have any answers. But I do have a lot of questions, and a lot of thoughts bouncing around. And I'm really happy I stumbled on these passages, because they will definitely influence how I proceed.

It's a good reminder that the moment you find yourself looking for an "easy" solution to a hard problem, you're ripe for being swindled. And that we should accept, and even enjoy, the challenge of hard problems. We're all better off when we have to work for what we get.